COMPANY

Procore Technologies / Macro UX Benchmarks

MY ROLE

Research methods for macro UX benchmarks, run the research, advocate for universal UX benchmarks, train the org and document the methods

TEAMMATES

UXR Coach: Zaira Tomayeva / UX Nudgers: Joshua Morris, Annahita Varahrami, Nick Murphy

SUMMARY

Procore’s Quality & Safety tools had issues with churn, and we were uncertain about the cause. Some thought the tools were too hard to use, but was this an assumption or an inconvenient truth? Was it all the tools or just one? I sought to set macro-level benchmarks we could research quickly, and suss out the truth.

PROBLEM / CHALLENGE

“We’re having issues with churn because the Quality and Safety tools are hard to use.” How might we evaluate this assumption quickly and improve?

GOALS

🧑‍🌾

Dig into assumptions

📊

Secure benchmarks

⏩

Execute quickly

📋

Prioritize improvements

WHAT'S ALL THE SUS ABOUT?

SETTLING ON A HIGH-LEVEL BENCHMARK

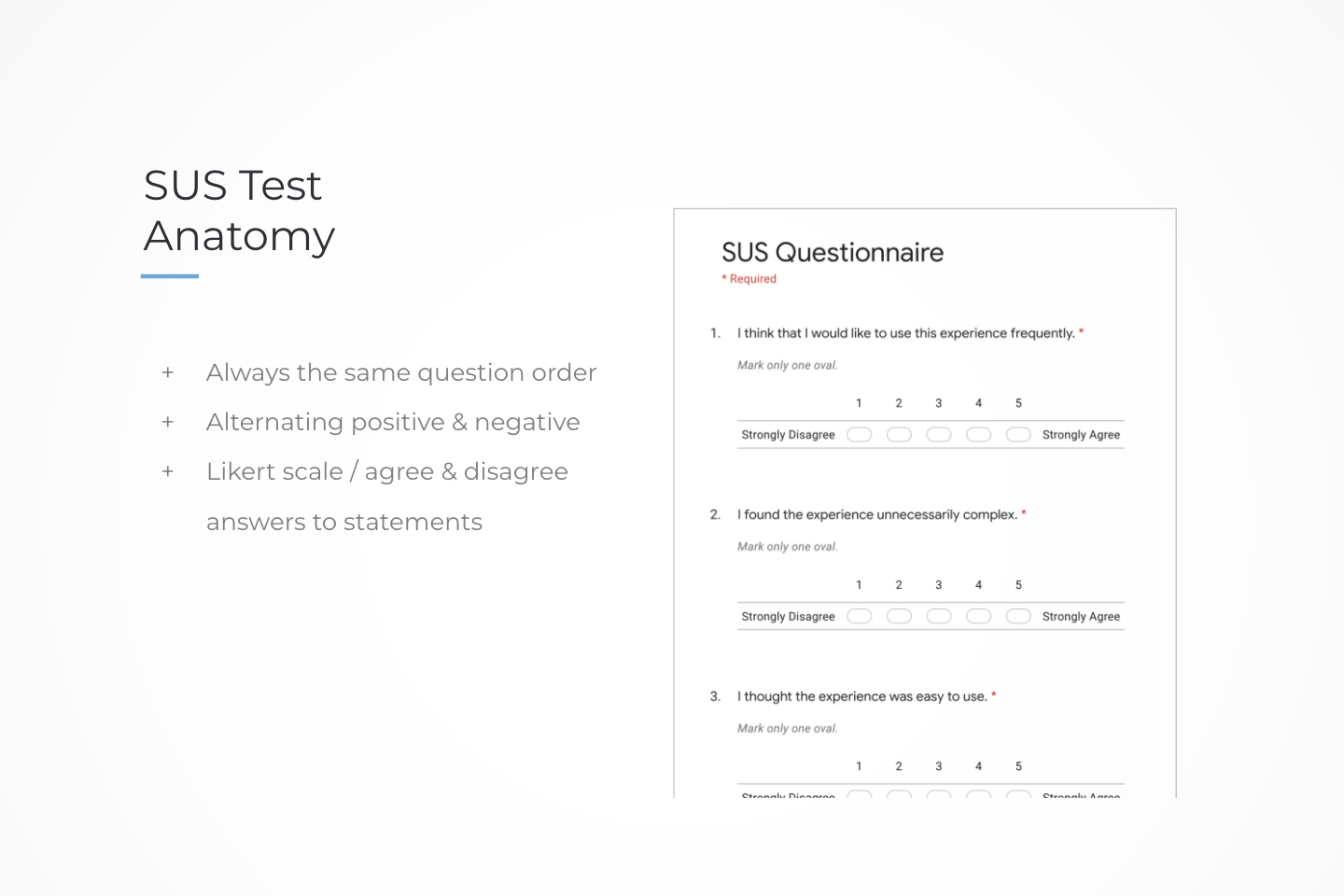

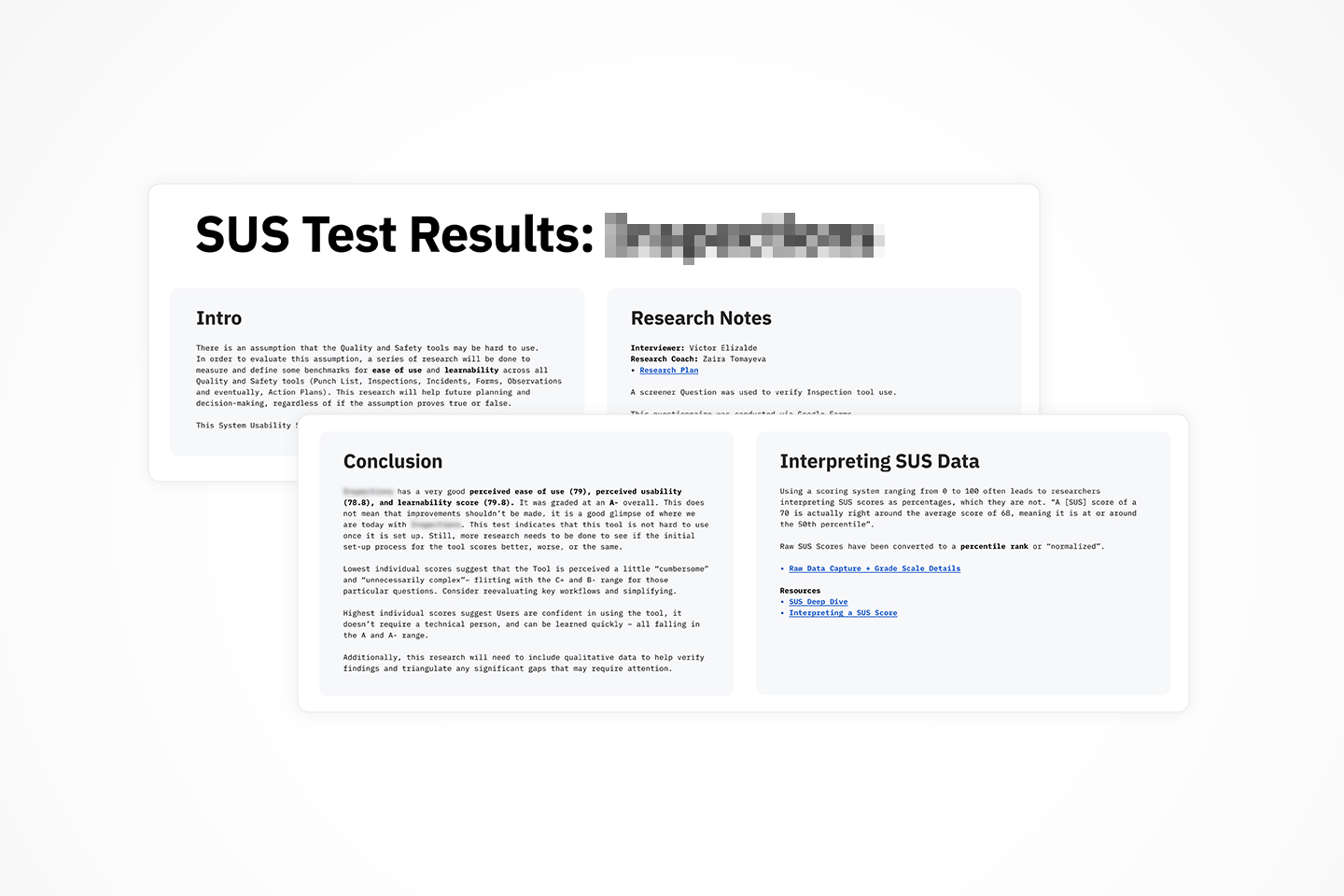

After some digging, I found a research method, the System Usability Scale (SUS), that was fast to execute and synthesize, and that would provide a high-level view of the state of usability and learnability.

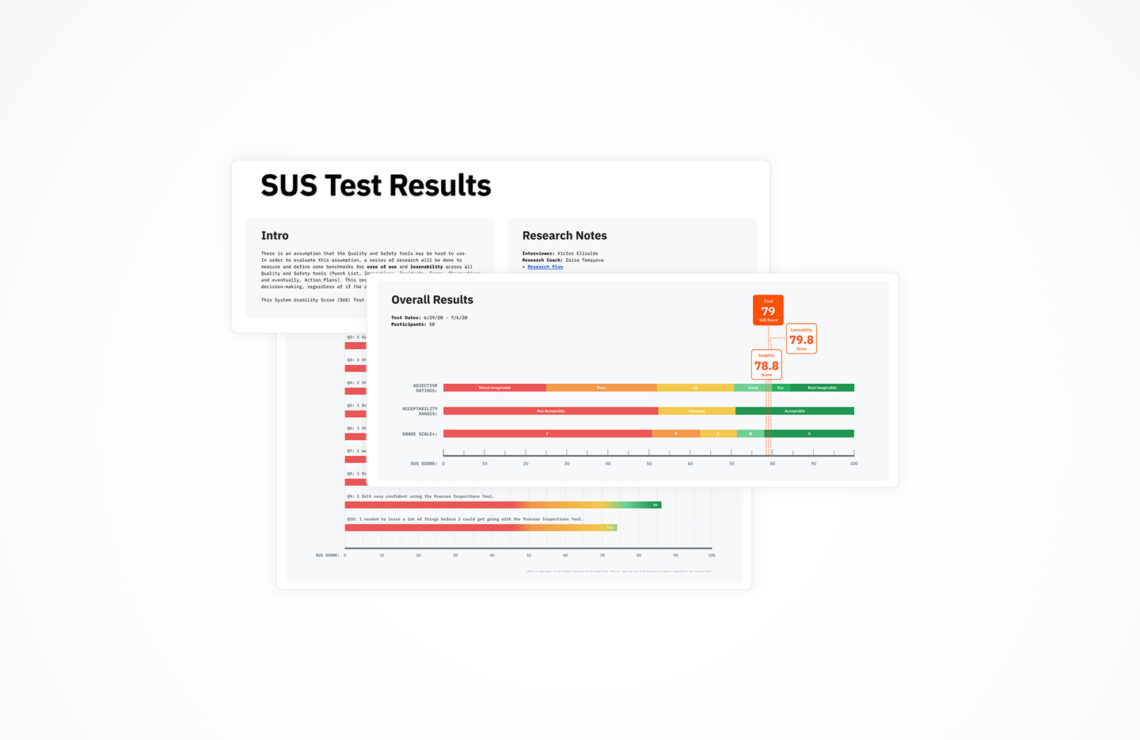

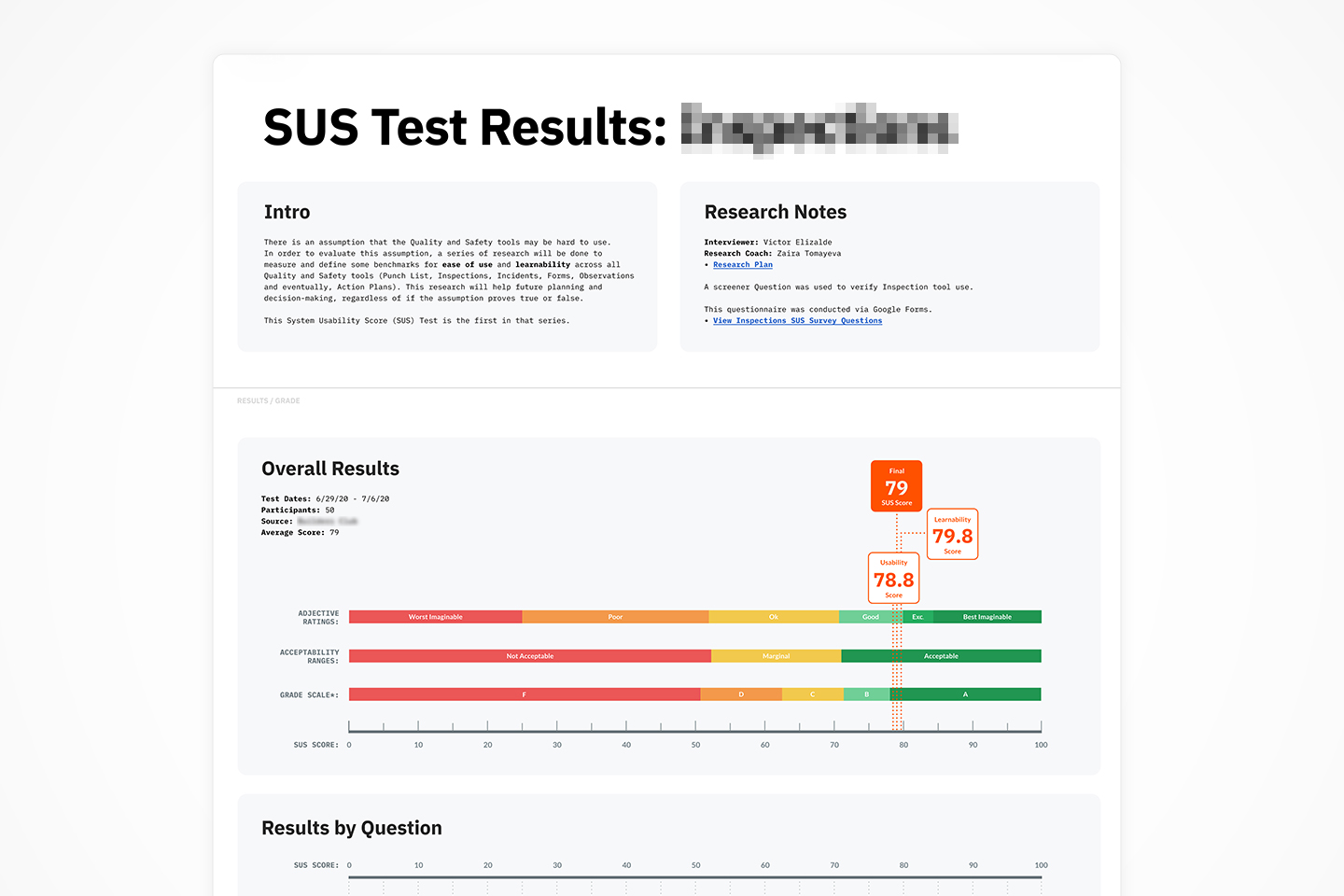

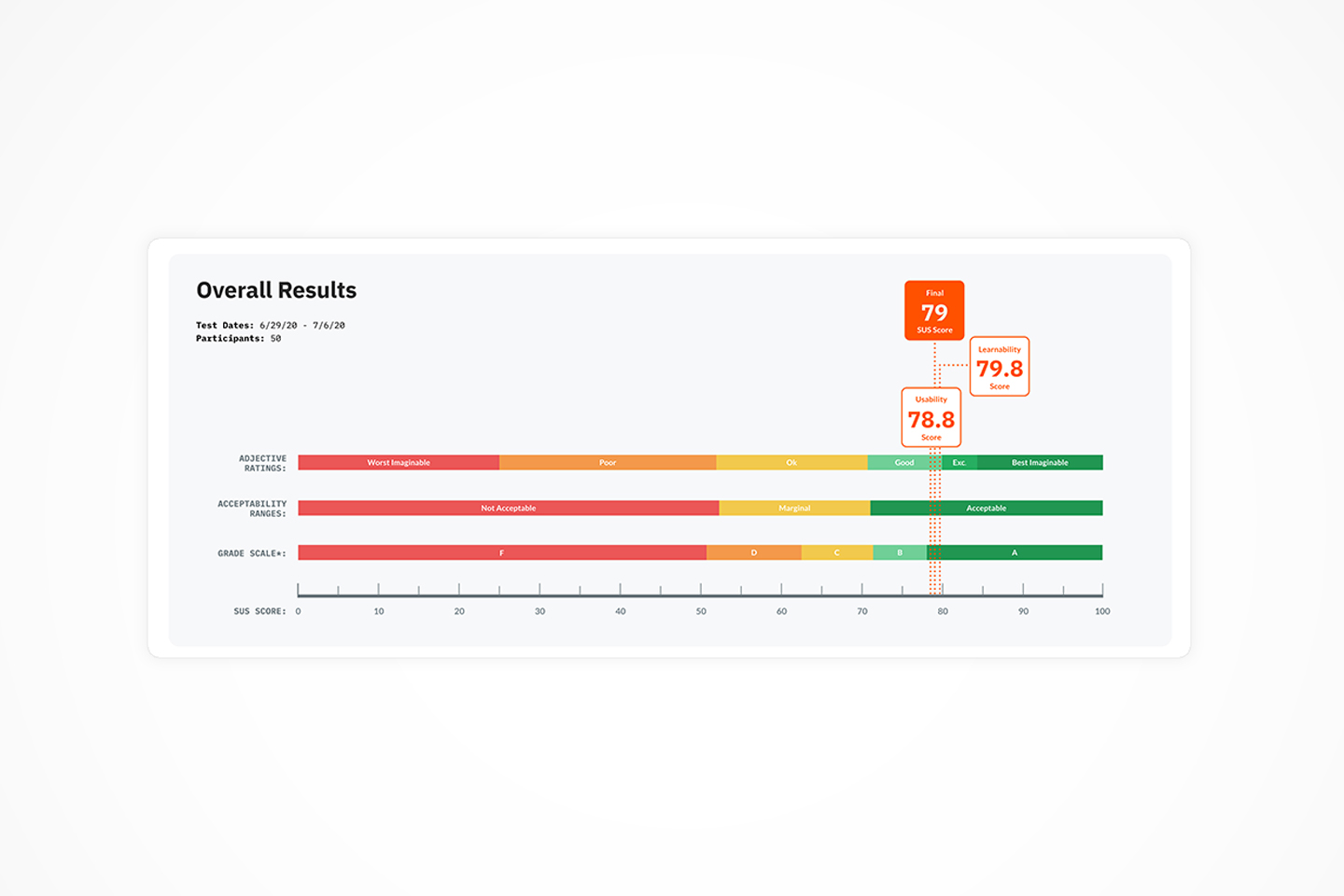

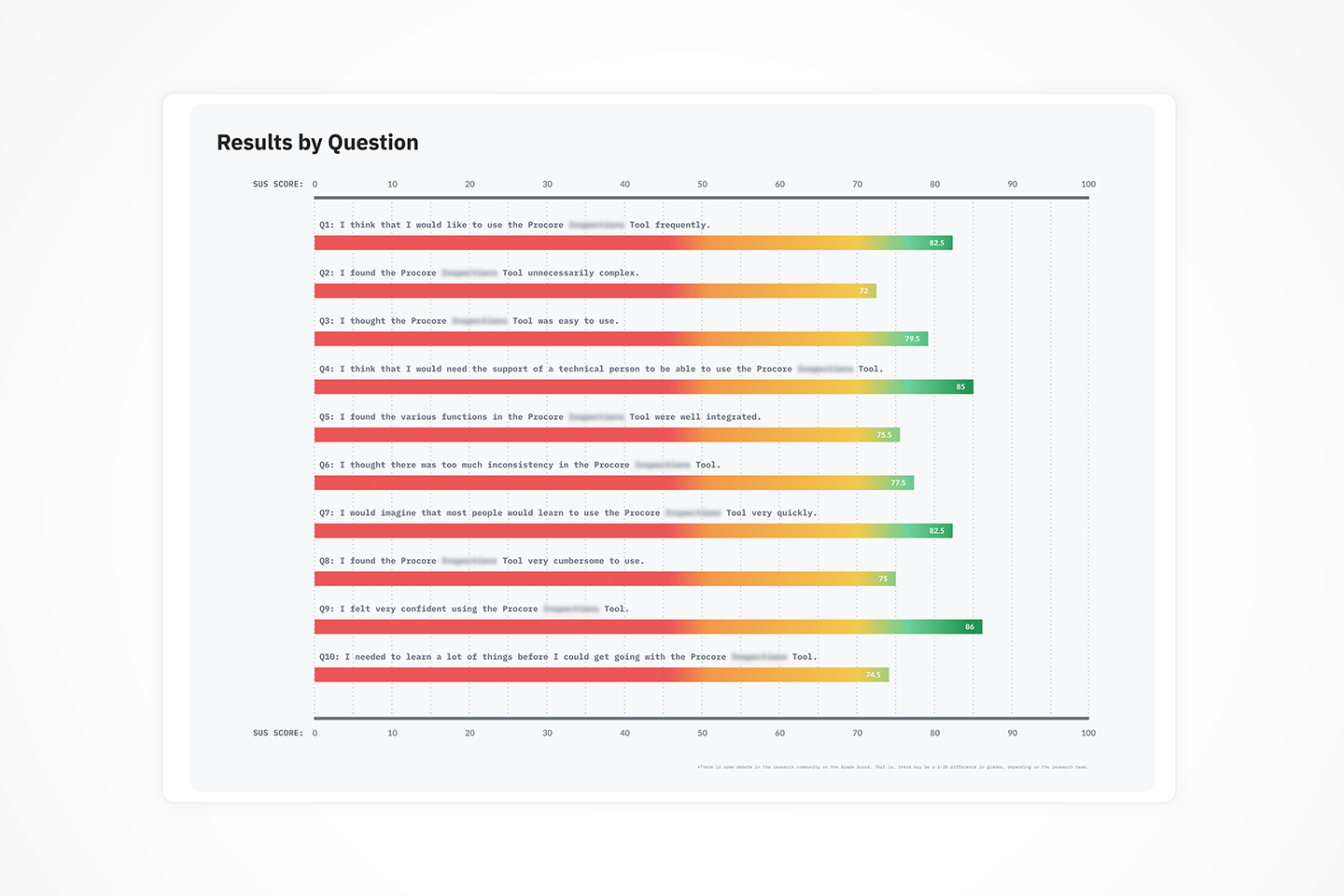

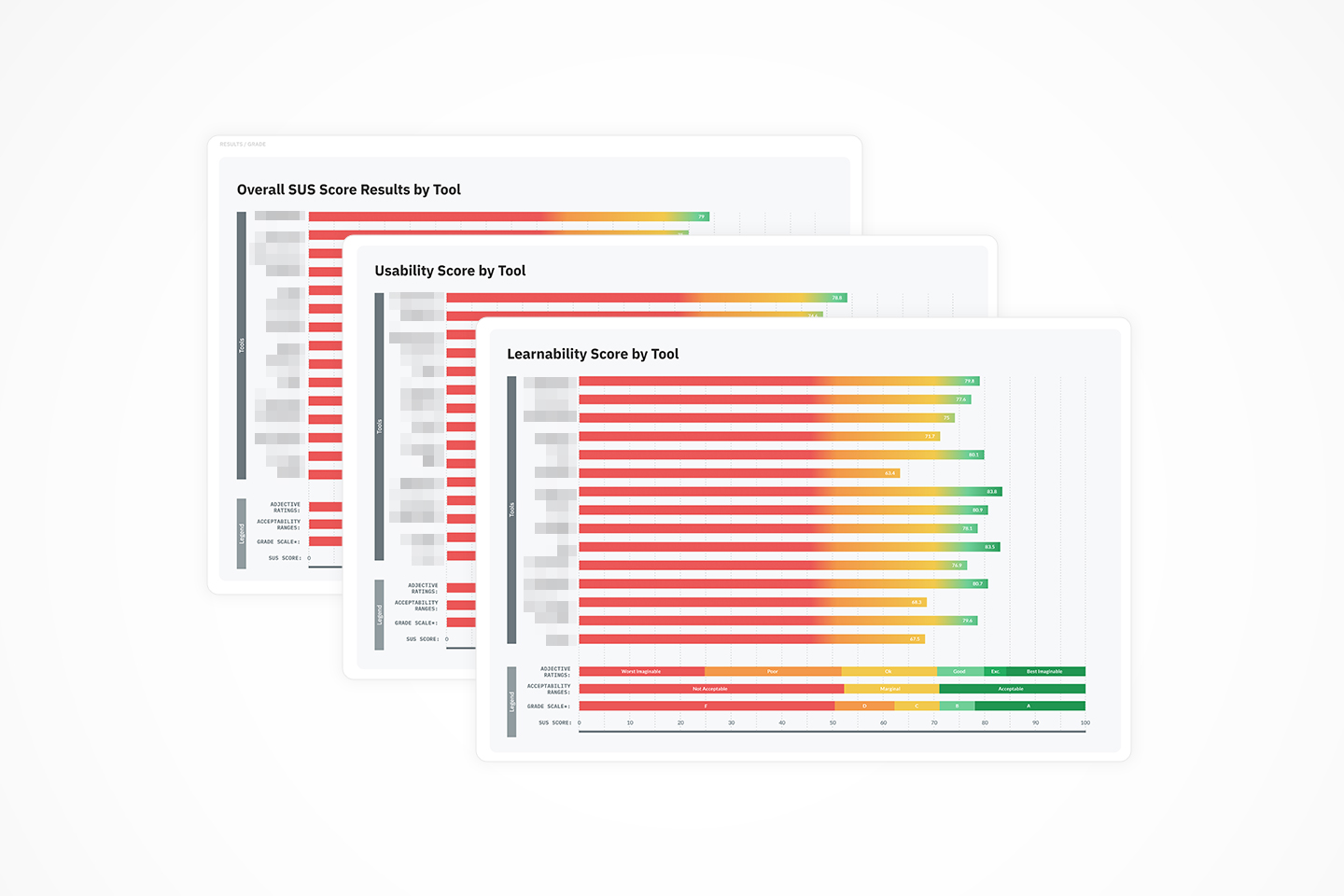

DATA VISUALIZATION

After conducting and synthesizing the SUS test on the first tool in Quality & Safety, we wanted to find a way to make this research easily digestible in meetings, Slack, and readouts. This meant making it visually pleasing and scannable.

USING SUS ACROSS A TOOLSET & CATEGORY

Once all tools in Quality & Safety were evaluated, we expanded the evaluation to another, related category of tools. This allowed us to benchmark across more of the Procore platform. It gave us a way to focus on the one or two worst-performing tools, instead of boiling the ocean.
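
For readers who want to see how this kind of per-tool comparison works, here is a minimal sketch. It assumes the standard 10-item SUS questionnaire answered on a 1 to 5 scale and uses the standard SUS scoring rule. The function names, tool names, and responses are hypothetical placeholders for illustration, not Procore data or the team's actual tooling.

```python
from statistics import mean


def sus_score(responses):
    """Score one participant's SUS questionnaire on the 0-100 scale.

    `responses` holds the 10 answers in question order, each from 1 to 5.
    """
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("SUS needs exactly 10 answers, each from 1 to 5")
    total = 0
    for index, answer in enumerate(responses):
        if index % 2 == 0:
            # Questions 1, 3, 5, 7, 9 are positively worded: answer minus 1.
            total += answer - 1
        else:
            # Questions 2, 4, 6, 8, 10 are negatively worded: 5 minus answer.
            total += 5 - answer
    # The summed contributions (0-40) are scaled to 0-100.
    return total * 2.5


def rank_tools(responses_by_tool):
    """Return (tool, average SUS score) pairs, worst-performing tool first."""
    averages = {
        tool: mean(sus_score(r) for r in participant_responses)
        for tool, participant_responses in responses_by_tool.items()
    }
    return sorted(averages.items(), key=lambda pair: pair[1])


# Hypothetical responses for two made-up tools, one participant each.
example = {
    "Tool A": [[4, 2, 4, 2, 4, 2, 4, 2, 4, 2]],
    "Tool B": [[3, 3, 3, 3, 3, 3, 3, 3, 3, 3]],
}
ranking = rank_tools(example)
print(ranking)
```

Sorting from lowest to highest average puts the tools that most need attention at the top of the list, which mirrors the goal of focusing on one or two tools rather than everything at once.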

SPREADING TO THE MASSES

I took this project many steps forward and became an advocate for continuing to set UX baselines across the platform. I worked with product and all of UX to present at lunch-and-learns about macro benchmarks, using SUS as one example. I also documented a SUS Kit in Confluence so product and UX could run these tests on their own with templates and video explanations.

HOW WE DID

📊

Empowered teams to measure with UX benchmarks

📈

Served as a reminder to stay data-driven

📌

Helped prioritize usability gaps

🚪

Lowered the barrier to entry

REFLECTIONS

This was a good learning experience about the types of UX benchmarks that are available. I would not have gotten this far without my research coach, Zaira Tomayeva. I also learned that there is no silver bullet when deciding how to evaluate the health of a tool at the macro and micro levels.

I was excited to learn new methods of evaluating the health of a tool and to look for ways to have universal benchmarks across a very large platform (20+ tools!). That said, if I did it all over again, I would get product involved a little sooner, so that these benchmarks could be part of a larger story that combines all the metrics (qual and quant).